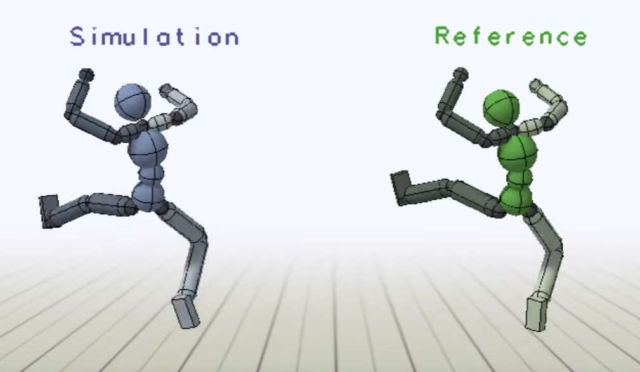

It can kick, punch, and even flip. UC Berkeley researchers created a virtual stuntman that could make computer-animated characters more lifelike.

In the project, named “DeepMimic,” researchers fed motion-capture data of different skills to a computer, and a simulated character practiced each skill for a month. Through deep reinforcement learning, the simulated character ran millions of trials, constantly comparing its performance to the motion-capture data, until it learned each skill.
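The "constantly comparing its performance to the motion-capture data" step can be pictured as a reward that is highest when the simulated pose matches the reference pose. The sketch below is only an illustration, not the Berkeley team's implementation: the function name `imitation_reward`, the pose as a flat list of joint angles, and the `scale` parameter are all assumptions made for this example.

```python
import math


def imitation_reward(sim_pose, ref_pose, scale=2.0):
    """Hypothetical imitation reward (an assumption, not the team's code).

    Returns 1.0 when the simulated pose matches the reference exactly
    and decays toward 0 as the two poses drift apart.
    """
    if len(sim_pose) != len(ref_pose):
        raise ValueError("poses must have the same number of joints")
    # Sum of squared differences between corresponding joint angles.
    squared_error = sum((s - r) ** 2 for s, r in zip(sim_pose, ref_pose))
    return math.exp(-scale * squared_error)


# Made-up joint angles (radians) for one frame of a reference motion.
ref = [0.0, 0.5, -0.3]
close_attempt = [0.05, 0.45, -0.3]
poor_attempt = [1.0, -0.5, 0.8]

print(imitation_reward(close_attempt, ref))
print(imitation_reward(poor_attempt, ref))
```

In a reinforcement learning loop, a reward like this would be computed at every simulation step, so trials that track the reference motion more closely score higher and are reinforced.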

Current deep reinforcement learning methods often result in unrealistic behaviors. The Berkeley team created one general algorithm that allows its characters to accurately reproduce a large variety of skills.

This could make it easier to bring more realistic physics to video games. The algorithm also enables the character to adapt to changes in its environment, such as adjusting for difficult terrain and hitting random targets.

The character learned more than 25 acrobatic moves, including dancing. This work also allows characters to learn from artist-created animations, enabling non-human characters like a lion and a T-rex. And did we mention they have a dragon?

More at news.berkeley.edu


Nice :)